Status: MVP delivered, 250k NIM still available

Description

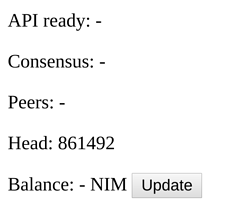

To connect to the Nimiq Network, both the Safe and the Hub include an iframe in the page from https://network.nimiq.com. This iframe starts a Nimiq browser node (client) that connects to the network. The Safe and Hub then communicate with this iframe client via RPC to update users’ balances and to listen for and send transactions. The Safe, the Hub, and any other app that uses the Network iframe each create their own instance of the iframe, and thus of the Nimiq client within it.

To reduce the number of Nimiq nodes running at the same time in a browser, and to avoid having to re-establish consensus in each Network iframe, we would like to add a method of communication between iframe instances that lets multiple users of the Network iframe share consensus.

Suggested Solution

A promising idea is to use most browsers’ built-in Broadcast Channel API:

> The Broadcast Channel API allows simple communication between browsing contexts (that is windows, tabs, frames, or iframes) with the same origin (usually pages from the same site). - MDN

Because the Network iframes are included from the same URL origin, it is possible for these iframes to communicate directly with each other via a Broadcast Channel.

New iframe instances would detect other, already running instances of the same iframe and would relay all requests to the iframe that already has consensus.
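The detection step described above could look roughly like the following sketch. All names here (`BusMessage`, `ChannelLike`, `InMemoryBus`, `NetworkInstance`, the message types) are hypothetical and not taken from the Network iframe source. The in-memory bus only stands in for a browser `BroadcastChannel` so the example runs anywhere; like the real API, it delivers a message to every other endpoint but not back to the sender. In a browser the reply arrives asynchronously, so a real implementation would presumably wait for a short timeout before deciding that no other instance exists.

```typescript
// Hypothetical message shapes for instance discovery.
type BusMessage =
  | { type: 'discover'; from: string }
  | { type: 'host-here'; from: string; to: string };

// The small surface this sketch needs; a browser BroadcastChannel offers
// the same postMessage/onmessage pair.
interface ChannelLike {
  postMessage(message: BusMessage): void;
  onmessage: ((event: { data: BusMessage }) => void) | null;
}

// In-memory stand-in for a broadcast channel (synchronous delivery).
class InMemoryBus {
  private endpoints: ChannelLike[] = [];

  connect(): ChannelLike {
    const endpoint: ChannelLike = {
      onmessage: null,
      postMessage: (message: BusMessage): void => {
        for (const other of this.endpoints) {
          // Deliver to everyone except the sender itself.
          if (other !== endpoint && other.onmessage) other.onmessage({ data: message });
        }
      },
    };
    this.endpoints.push(endpoint);
    return endpoint;
  }
}

class NetworkInstance {
  role: 'undecided' | 'host' | 'relay' = 'undecided';
  hostId: string | null = null;

  constructor(readonly id: string, private channel: ChannelLike) {
    channel.onmessage = (event) => this.handle(event.data);
  }

  // Ask on the channel whether an instance with consensus already exists.
  discover(): void {
    this.channel.postMessage({ type: 'discover', from: this.id });
  }

  // Called once discovery has finished without an answer: start our own node.
  becomeHostIfAlone(): void {
    if (this.role === 'undecided') {
      this.role = 'host';
      this.hostId = this.id;
    }
  }

  private handle(message: BusMessage): void {
    if (message.type === 'discover' && this.role === 'host') {
      // Tell the newcomer that a host already exists.
      this.channel.postMessage({ type: 'host-here', from: this.id, to: message.from });
    } else if (message.type === 'host-here' && message.to === this.id && this.role === 'undecided') {
      // Relay all requests to the existing host instead of starting a node.
      this.role = 'relay';
      this.hostId = message.from;
    }
  }
}

// Usage: the first iframe finds nobody and hosts; the second one relays.
const bus = new InMemoryBus();
const first = new NetworkInstance('iframe-a', bus.connect());
first.discover();
first.becomeHostIfAlone();

const second = new NetworkInstance('iframe-b', bus.connect());
second.discover();
second.becomeHostIfAlone(); // no effect, a host already answered
```

After this runs, `first` is the host and `second` is a relay pointing at `iframe-a`. Forwarding of the actual balance and transaction requests is left out of the sketch.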

A deterministic communication protocol between the iframe instances on the channel would have to be developed, so that it is always unambiguous which iframe sends and which iframe receives a given broadcast message.
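One possible way to make the channel traffic unambiguous is sketched below, purely as an illustration. It assumes that every message is wrapped in an envelope carrying sender, recipient, and a request id, and that two instances starting at the same moment resolve the tie with a rule every instance evaluates identically (oldest first, then lowest id). The names `Envelope`, `InstanceInfo`, `isAddressedTo`, and `electHost` are hypothetical and do not come from the Network iframe or the RPC library.

```typescript
// Recipient value meaning "every instance on the channel" (assumed convention).
const EVERYONE = '*';

// Hypothetical envelope: who sent it, who should handle it, and which
// request a response belongs to.
interface Envelope<T> {
  requestId: number;
  from: string;
  to: string;
  payload: T;
}

// An instance only handles messages addressed to it or to everyone.
function isAddressedTo<T>(envelope: Envelope<T>, selfId: string): boolean {
  return envelope.to === selfId || envelope.to === EVERYONE;
}

interface InstanceInfo {
  id: string;
  startedAt: number; // e.g. a timestamp taken when the iframe loaded
}

// Deterministic choice of host: the same set of instances always yields the
// same result, regardless of the order in which announcements arrived.
function electHost(instances: InstanceInfo[]): InstanceInfo | undefined {
  const sorted = [...instances].sort((a, b) => {
    if (a.startedAt !== b.startedAt) return a.startedAt - b.startedAt;
    return a.id < b.id ? -1 : a.id > b.id ? 1 : 0;
  });
  return sorted[0];
}

// Usage: two iframes started in the same millisecond, a third one later.
const candidates: InstanceInfo[] = [
  { id: 'iframe-b', startedAt: 1000 },
  { id: 'iframe-c', startedAt: 1500 },
  { id: 'iframe-a', startedAt: 1000 },
];
const chosen = electHost(candidates);

const reply: Envelope<string> = { requestId: 7, from: 'iframe-a', to: 'iframe-c', payload: 'ok' };
const handledByC = isAddressedTo(reply, 'iframe-c');
const handledByB = isAddressedTo(reply, 'iframe-b');
```

Here `chosen` is the entry for `iframe-a`, and only `iframe-c` handles the reply. Whether a start timestamp is a suitable ordering key in practice is an open design question.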

Resources

Network iframe source: https://github.com/nimiq/network/tree/master/src/v2 (Only v2 of the Network iframe API needs to support this communication channel).

Broadcast Channel API docs: https://developer.mozilla.org/docs/Web/API/Broadcast_Channel_API.

For ideas for cross-window communication and message formats, refer to the Nimiq RPC library: https://github.com/nimiq/rpc.

The RPC library could also be extended to support broadcast channels. However, to avoid increasing the size of the RPC library for all other uses, the channel communication library should probably extend the RPC classes instead.

Completion Criteria

The proposal is complete when:

- Two websites include the Network iframe, but only one Nimiq node is started and the second iframe uses the consensus of the first iframe.

- The code is readable and organized so that it can be accepted into the Network repository.

- If possible, the Network iframe API does not change.

Reward

Total: 1’000’000 NIM

- 750’000 NIM have been rewarded to @Chugwig for his work on a first implementation that you can find in this PR.

- 250’000 NIM are still available for code style improvements. If you’re interested in working on them, please see this message and get in touch to coordinate the next steps.

Please let us know. but given the scope and ideal of the bounties it’s not something I felt was worth hunting down (though if instructions to reproduce were given I’d take a look even after receiving the reward).
